What Is A Multimeter?

A multimeter is a versatile electronic testing device used to measure electrical quantities such as voltage, current, and resistance. It combines several measurement functions into a single instrument, allowing users to troubleshoot, test, and diagnose electrical circuits and components.

In the vast and dynamic world of electronics, where voltages surge, currents flow, and resistance plays a critical role, there exists a humble yet indispensable tool: the multimeter. This compact device, often hailed as the Swiss Army knife of electrical measurements, serves as a guiding light for engineers, electricians, technicians, and hobbyists alike. With its ability to provide accurate readings of various electrical quantities, the multimeter is a dependable ally in understanding, troubleshooting, and mastering electrical systems. Whatever your background, it is a must-have device that helps you verify that electrical systems are functioning properly and lets you work on them safely and effectively.

In this article, we’ll explore the ins and outs of multimeters, from their basic functionalities to advanced techniques. We’ll discuss the different types of multimeters and their features, and provide practical tips and best practices for accurate measurements. So, if you’re ready to unlock the full potential of your multimeter and elevate your electrical knowledge, let’s dive in.

Why Are Multimeters Important?

As mentioned earlier, a multimeter is essentially a must-have if you are performing any type of electrical or electronics work. Nobody likes blanket statements like that, so why would we go as far as to say it? Well, a multimeter provides many benefits to the user, such as:

- Functionality: Multimeters combine numerous measurement functions into one tool. This eliminates the need for several separate, more specialized tools, keeping the initial cost and cost of ownership as low as possible.

- Precision: A multimeter measures various electrical parameters accurately and precisely, ensuring reliable diagnostics and troubleshooting. With its wide range of measurement functions, it gives users precise data on voltage, current, resistance, continuity, and more. This accuracy is essential for identifying electrical issues, assessing circuit performance, and making informed decisions during repairs or installations.

- Safety: Additionally, multimeters contribute to ensuring safety in electrical work. By allowing professionals to measure voltage levels and check for continuity or resistance, multimeters aid in identifying potential hazards, such as live wires or short circuits. This capability significantly reduces the risk of electrical accidents and promotes a safer working environment.

- Versatility: Versatility is another crucial part of multimeters. They can be utilized across a wide range of applications, including residential electrical systems, automotive diagnostics, industrial maintenance, electronic circuit testing, and renewable energy systems. Their adaptability to various environments and electrical setups makes them versatile tools for professionals working in many different fields.

What Are The Common Types Of Multimeters?

The two primary types of multimeters are analog multimeters and digital multimeters. The distinction between these two types is how the measurement reading is displayed on the unit.

As you could imagine, over the 100+ year history of multimeters, numerous different types have been developed. Of all the different types out there, the two primary types of multimeters in use are:

- Analog Multimeters

- Digital Multimeters

The primary difference between the two is how the unit displays its measurements.

Each type of multimeter has its own advantages and disadvantages. Nowadays, most people use digital multimeters because their advantages usually outweigh their disadvantages.

Multimeters are also commonly classified by their construction. Over the years, multimeters have taken many sizes and shapes, but the two primary types of multimeter construction are:

- Handheld Multimeters

- Benchtop Multimeters

The distinction here is based on how portable the instrument is.

We will be diving into the differences between analog and digital multimeters as well as handheld and benchtop multimeters in a later article. You will learn how they differ and the pros and cons of each.

Introduction To The Definitive Guide To Using Multimeters

While the information above covers the basics of what a multimeter is, there are still a lot of questions that need to be answered to become an expert in using multimeters:

- What is a Multimeter?

- What is the History of the Multimeter?

- What Does a Multimeter Measure?

- What is an Analog Multimeter?

- What is a Digital Multimeter?

- Should I Use an Analog or Digital Multimeter?

- What is the Difference Between a Handheld and Benchtop Multimeter?

- What is a True-RMS Multimeter?

- What Features and Accessories Should I Look For in a Multimeter?

- How Accurate is Your Multimeter and Why Does it Matter?

- What Are Multimeter Ranges and Why Do They Matter?

- What Do I Consider When Buying a Multimeter?

- How Do I Use a Multimeter?

- How Do I Properly Maintain a Multimeter?

- How Do I Calibrate a Multimeter?

- What is the Future of Multimeters?

We’ll spend time on each of these areas throughout the rest of this guide, but we wanted to introduce them here because the list shows how we structured the guide as a whole.

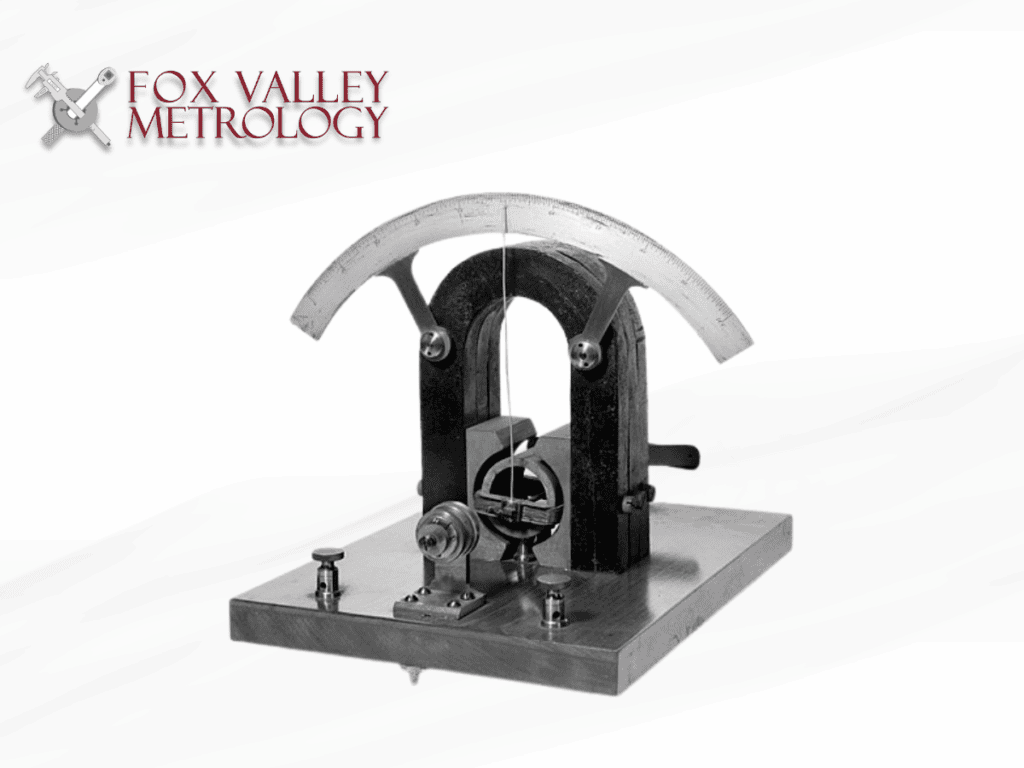

What Is The History Of The Multimeter?

The multimeter was invented in 1920 and has undergone numerous advancements since.

In 1920, the first multimeter, known as the AVOmeter, was invented by Donald Macadie, consolidating multiple measuring functions into a single device. Over the years, multimeters evolved from analog to digital technology, offering greater precision, reliability, and user-friendliness. The introduction of microprocessors further enhanced their capabilities, enabling complex calculations, data storage, and computer communication.

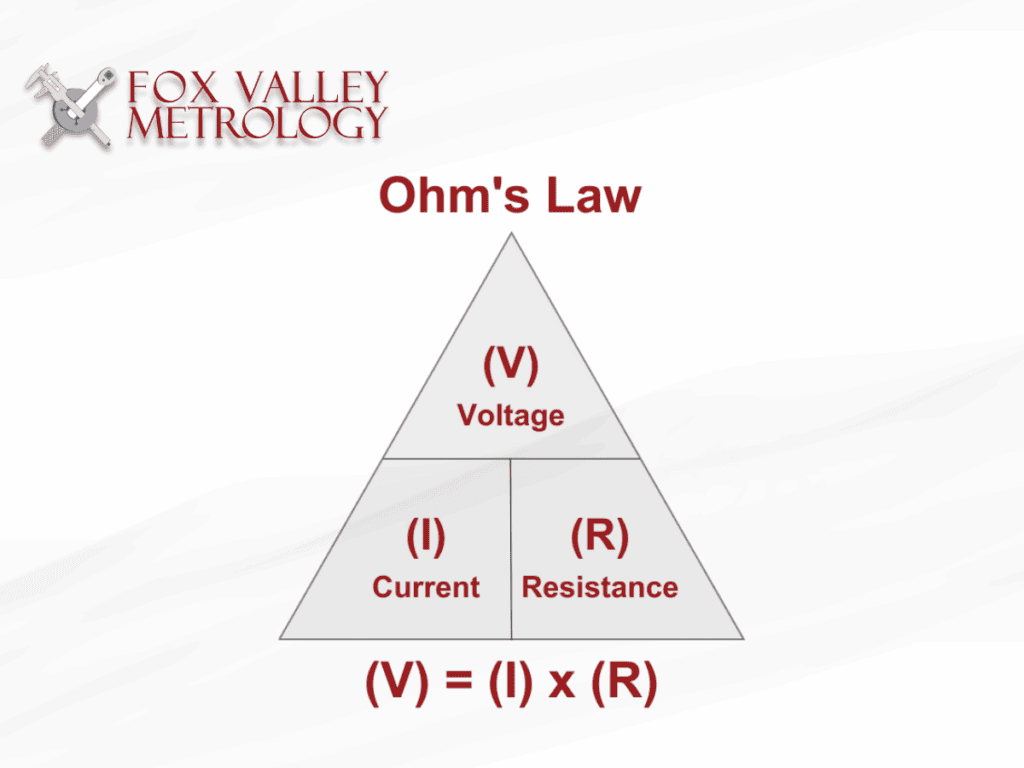

What Does A Multimeter Measure?

A multimeter measures common electrical quantities, most notably voltage (volts), current (amps) and resistance (ohms).

Multimeters are versatile electrical measurement instruments that quantify various electrical parameters within circuits. They measure values such as voltage, current, resistance, capacitance, and frequency, providing crucial insights into the behavior and health of electronic components and systems. It is this extremely wide range of measurements that has made the multimeter the industry standard for routine electrical measurement.

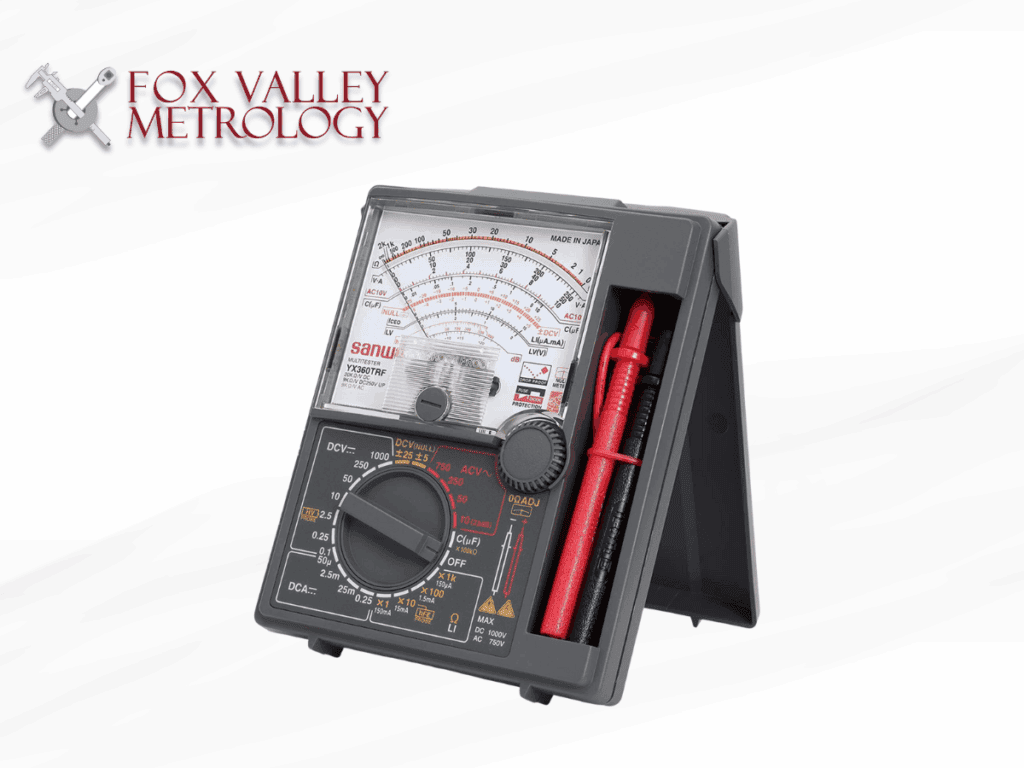

What Is An Analog Multimeter?

An analog multimeter is a simple measuring device for basic electrical parameters (such as voltage, current and resistance) which contains a dial with numbers and a needle that moves to show measurements.

An analog multimeter is the “old faithful” of the electrical measurement world. While it may not be the flashiest instrument on the planet, it is certainly a workhorse. It can tell you things like how much voltage is in a circuit or how much resistance is in a wire. You just need to connect the right wires to the right places and read the number where the needle points.

It’s a basic, easy-to-use gadget that helps you understand and measure electricity without any fancy digital stuff. Sort of like a tape measure versus a laser distance meter – you know that it will just work.

What Is A Digital Multimeter?

A digital multimeter (or DMM for short) is a high-tech tool used to measure electricity. It contains a digital readout for measuring things like voltage, current, resistance, capacitance, frequency, and more.

Unlike analog multimeters, which use mechanical movements and analog scales, DMMs use digital displays, typically a liquid crystal display (LCD) or LED screen, to provide precise numerical measurements.

DMMs can also offer additional features such as data logging, peak hold, continuity testing with audible beeps, diode testing, temperature measurement with external probes, and more. Some advanced DMMs may even have built-in wireless connectivity or USB ports for data transfer and integration with computer software.

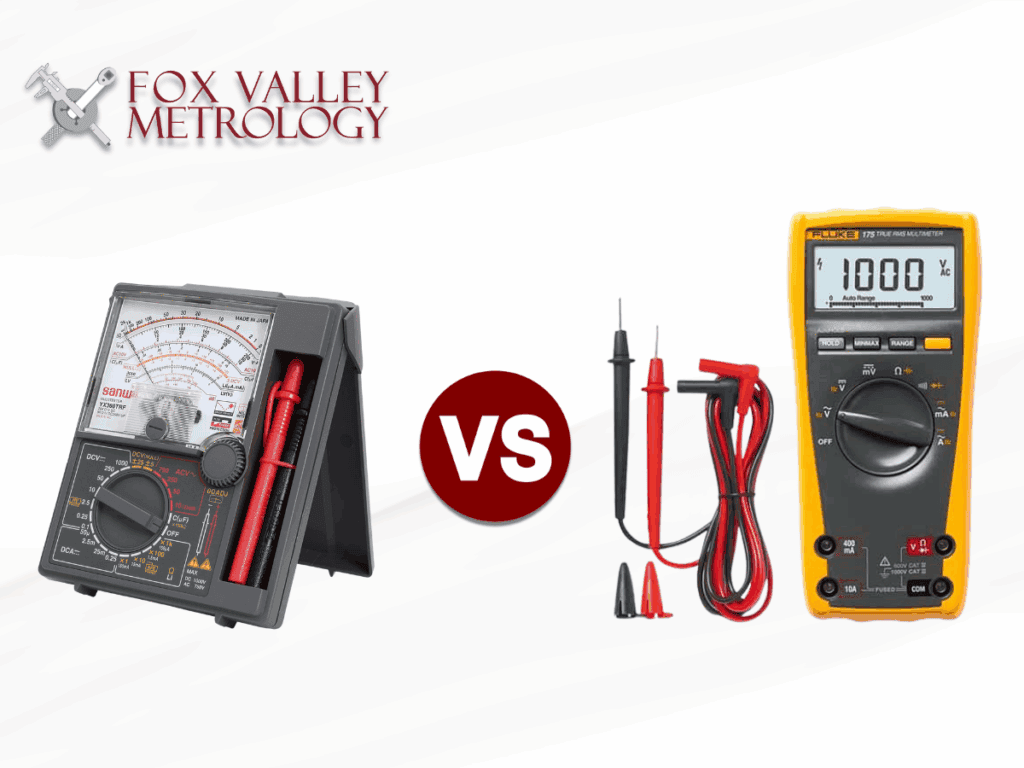

Should I Use An Analog Or Digital Multimeter?

Digital multimeters are far and away the most common multimeter these days, due to their higher accuracy and convenience. However, analog multimeters may still serve a purpose in your application, especially if the signal you are measuring fluctuates.

With most new multimeters being manufactured with a digital display, it may be tempting to assume that it is a slam-dunk decision to opt for a digital multimeter over an analog multimeter. After all, the world is becoming more and more digitized.

In most cases, that would be correct. However, there are some important advantages and disadvantages to take into account when deciding which to use.

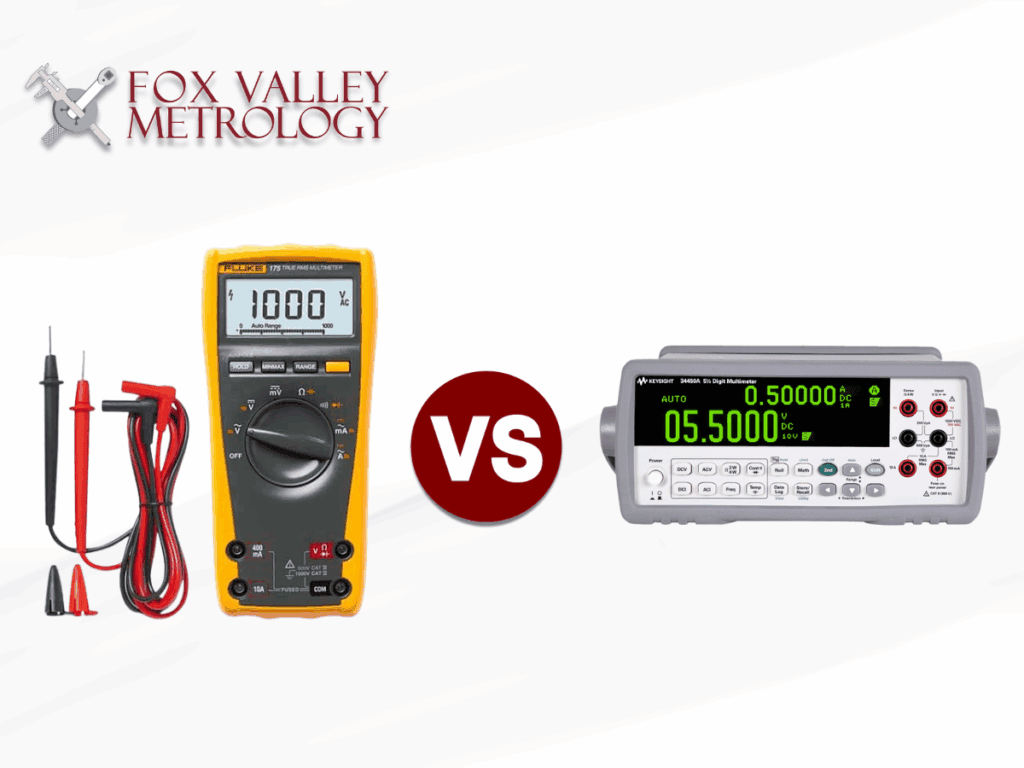

What Is The Difference Between A Handheld And Benchtop Multimeter?

There are two primary constructions of multimeters: handheld and benchtop. While handheld multimeters are extremely portable and offer the ultimate in convenience, benchtop multimeters are often far more accurate and provide many additional features and functionality.

Outside of making the decision to choose an analog or digital multimeter, the next most important decision is to choose between benchtop and handheld multimeters.

There is no doubt that handheld multimeters are far more common than benchtop multimeters. Given that the multimeter is something of the general workhorse of all electrical measurements, it makes sense that the more portable option would be more popular.

However, there are plenty of scenarios where you will need a far more specialized piece of equipment – for example when extremely accurate measurements are necessary. That is where the benchtop multimeter comes into play.

What Is A True-RMS Multimeter?

A True-RMS (Root Mean Square) multimeter measures AC voltage and current by computing the square root of the mean of the squared values of the waveform. Unlike average-responding multimeters, it stays accurate for non-sinusoidal waveforms, such as the distorted AC current drawn by non-linear loads.

A True-RMS multimeter uses the Root Mean Square (RMS) method of calculating a value. RMS differs from average readings because it provides an accurate representation of the effective value of alternating current (AC).

In simpler terms, while average-reading multimeters may give inaccurate readings for complex waveforms, a True-RMS multimeter reports the correct effective value, provided the signal stays within the meter’s specified bandwidth and crest factor.

It is one of the few electrical measuring devices that can do so, outside of expensive oscilloscopes. As a result, it has become one of the most common multimeter types out there today.
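
To make the difference concrete, here is a minimal Python sketch that compares a true-RMS calculation with an average-responding one. The function names, the sample count, and the ideal unit-amplitude test waveforms are assumptions chosen for illustration; a real meter does this in hardware or firmware and is limited by its own bandwidth and sampling.

```python
import math


def true_rms(samples):
    """Square root of the mean of the squared samples."""
    return math.sqrt(sum(s * s for s in samples) / len(samples))


def average_responding(samples):
    """Rectified average scaled by the sine-wave form factor (about 1.1107).

    This mimics a meter that is calibrated to read correctly only on a
    pure sine wave.
    """
    mean_abs = sum(abs(s) for s in samples) / len(samples)
    return mean_abs * math.pi / (2 * math.sqrt(2))


N = 1000  # samples over exactly one period (illustrative choice)
sine = [math.sin(2 * math.pi * (k + 0.5) / N) for k in range(N)]
square = [1.0 if k < N // 2 else -1.0 for k in range(N)]

for name, wave in (("sine", sine), ("square", square)):
    print(f"{name}: true RMS = {true_rms(wave):.3f}, "
          f"average-responding = {average_responding(wave):.3f}")
```

On the sine wave, both calculations agree at about 0.707. On the square wave, the true RMS is 1.000, while the average-responding calculation reports about 1.111, roughly 11% too high.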

What Features and Accessories Should I Look For In A Multimeter?

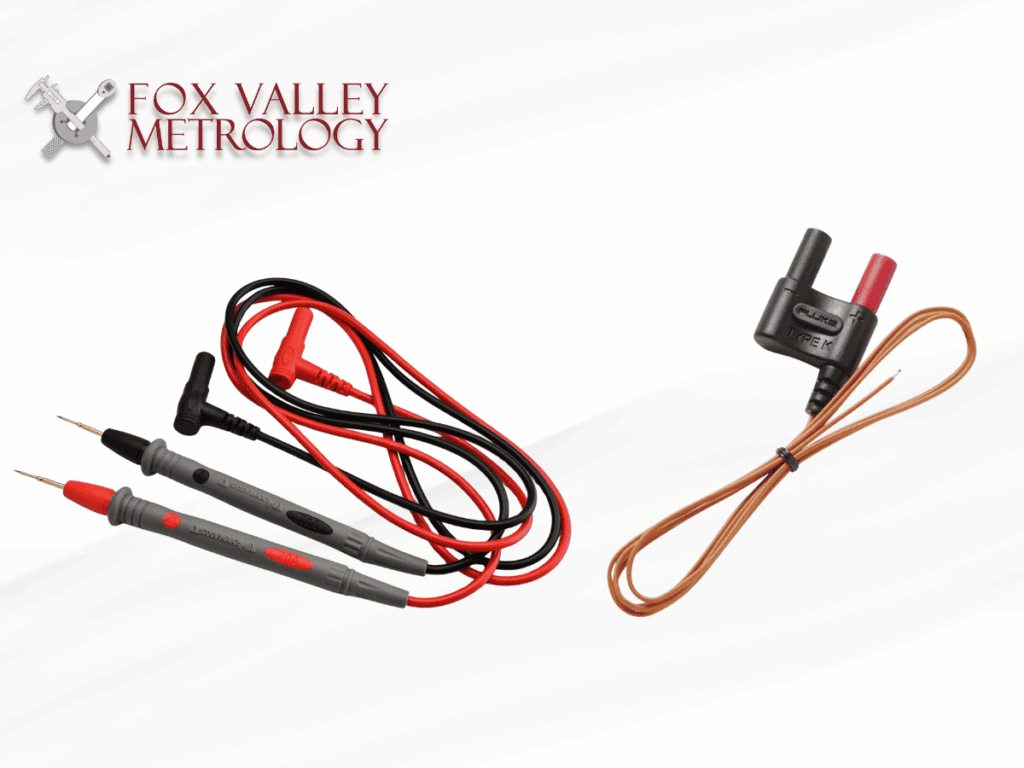

The most common multimeter accessory you will need to consider is the type of multimeter leads you will be using. The primary feature to look for is whether the multimeter offers auto-ranging. Another major consideration is whether the multimeter has any data output options and, if so, what type of data output it provides.

After deciding between an analog or digital multimeter and whether it should be a benchtop or handheld multimeter, you should consider a few common accessories, features and options that many different types of multimeters can provide:

- Auto-Ranging Capability

- Multimeter Lead Types

- Multimeter Data Output Options

How Accurate Is Your Multimeter And Why Does it Matter?

The most common portable digital multimeters have an accuracy of ±0.5% for DC voltages. Benchtop multimeters are commonly specified with an accuracy of ±0.01%, or even better.

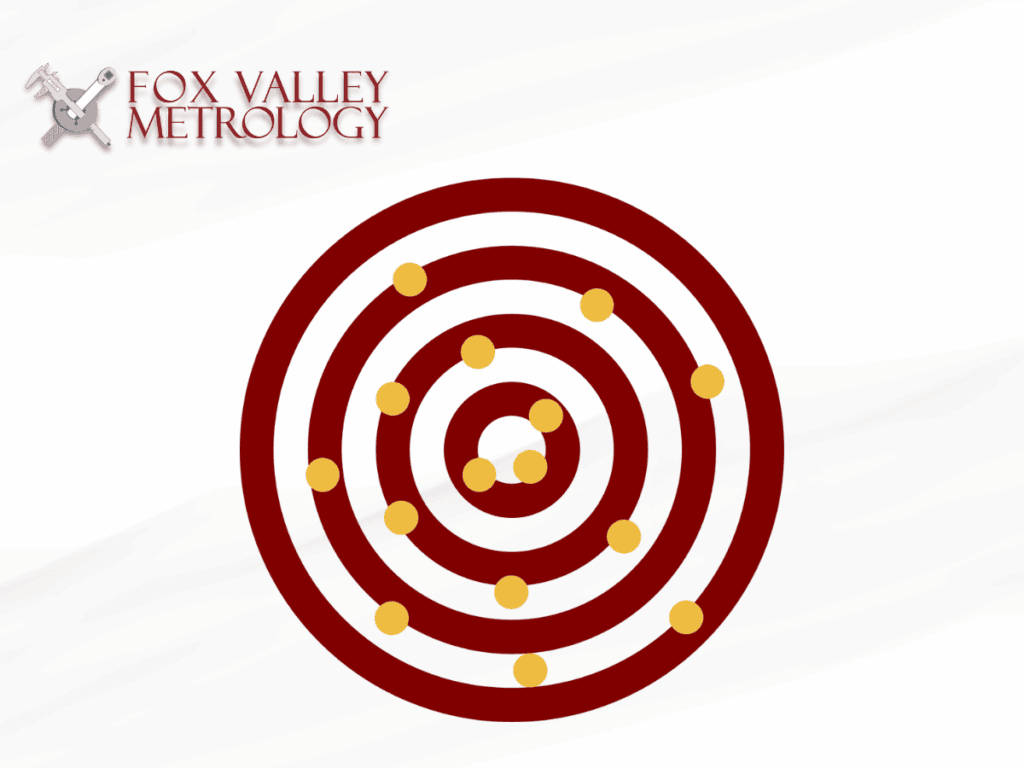

The accuracy of a multimeter is about how close the measured values are to the true values of the electrical parameters. It’s crucial for any measuring tool because it tells us how reliable and trustworthy the measurements are.

It is easiest to think of this as a target. The “true value” is the center bullseye. The “measured values” are the spots where your arrows land around that bullseye. The accuracy of your shot is how far those arrows land from the bullseye.

Multimeters typically come with a specified accuracy percentage or tolerance indicated by the manufacturer. This accuracy value indicates the maximum permissible deviation between the displayed reading on the multimeter and the actual or true value of the measured parameter. For example, a multimeter with a ±1% accuracy means that the displayed value can deviate by a maximum of 1% from the true value.
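
As a rough illustration of the ±1% example, the sketch below turns a displayed reading and an accuracy percentage into the band that the reading could fall in. The function name and the 12.00 V reading are hypothetical, and the sketch assumes the accuracy is a simple percentage of the reading; many datasheets state accuracy as a percentage of reading plus a number of counts, which this does not model.

```python
def reading_bounds(displayed, accuracy_pct):
    """Return (low, high) bounds for a displayed reading.

    Assumes accuracy is specified purely as +/- a percentage of the reading.
    """
    margin = abs(displayed) * accuracy_pct / 100.0
    return displayed - margin, displayed + margin


# Hypothetical example: a 12.00 V reading on a meter specified at +/-1%.
low, high = reading_bounds(12.00, 1.0)
print(f"Reading could lie between {low:.2f} V and {high:.2f} V")
```

With these numbers, the band runs from 11.88 V to 12.12 V.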

What Are Multimeter Ranges And Why Do They Matter?

Multimeters come with selectable ranges that help protect the unit from overload. Best practice is to use the lowest range that can accommodate the measurement without overloading the multimeter. This will give you the most accurate measurement.

Common ranges for digital multimeters are:

- DC Voltage: 200mV, 2000mV, 20V, 200V, 600V

- AC Voltage: 200V, 600V

- Current: 200µA, 2000µA, 20mA, 200mA, 10A

- Resistance: 200Ω, 2000Ω, 20kΩ, 200kΩ, 2000kΩ

All multimeters come with different measurement ranges to accommodate a wide range of values that you might encounter when working with electronic components and circuits. These ranges are selectable on the multimeter itself before performing the measurement.

Ranges exist to balance accuracy, sensitivity, resolution, overload protection, and speed of measurement.
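
The "lowest range without overloading" rule can be sketched in a few lines of Python. The range values come from the DC voltage list above; the function names and the 2000-count display are assumptions made for illustration, and the sketch treats each nominal range value as its full-scale limit.

```python
# DC voltage ranges from the list above, expressed in volts.
DC_VOLTAGE_RANGES = [0.2, 2.0, 20.0, 200.0, 600.0]

# Assumption for illustration: the display resolves 2000 counts per range.
DISPLAY_COUNTS = 2000


def lowest_suitable_range(expected_value, ranges):
    """Return the smallest range that can hold the expected value, or None."""
    for full_scale in sorted(ranges):
        if abs(expected_value) <= full_scale:
            return full_scale
    return None  # the value would overload every available range


def resolution(full_scale, counts=DISPLAY_COUNTS):
    """Smallest step the display can show on a given range."""
    return full_scale / counts


chosen = lowest_suitable_range(9.0, DC_VOLTAGE_RANGES)
print(f"Measure a 9 V battery on the {chosen} V range")
print(f"Resolution on 20 V range:  {resolution(20.0):.3f} V")
print(f"Resolution on 200 V range: {resolution(200.0):.3f} V")
```

Under these assumptions, a 9 V battery lands on the 20 V range, where each display step is 0.010 V. On the 200 V range the same reading would only resolve to 0.100 V, which is why the lowest suitable range gives the most detailed measurement.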

What Do I Consider When Buying A Multimeter?

Choosing the right multimeter for your job is essential to get accurate and reliable measurements. You will need to consider several factors to make sure it fits your requirements and helps you throughout your work.

When buying a multimeter, you will need to consider:

- Types of Measurements

- Measurement Ranges

- Accuracy & Resolution Requirements

- Portability

- Anticipated Working Conditions

- Display

- Additional Features

- Construction

- Budget

- Probe Types

- Data Capture Options

- Brand Reputation

- Model Reputation

How Do I Use A Multimeter?

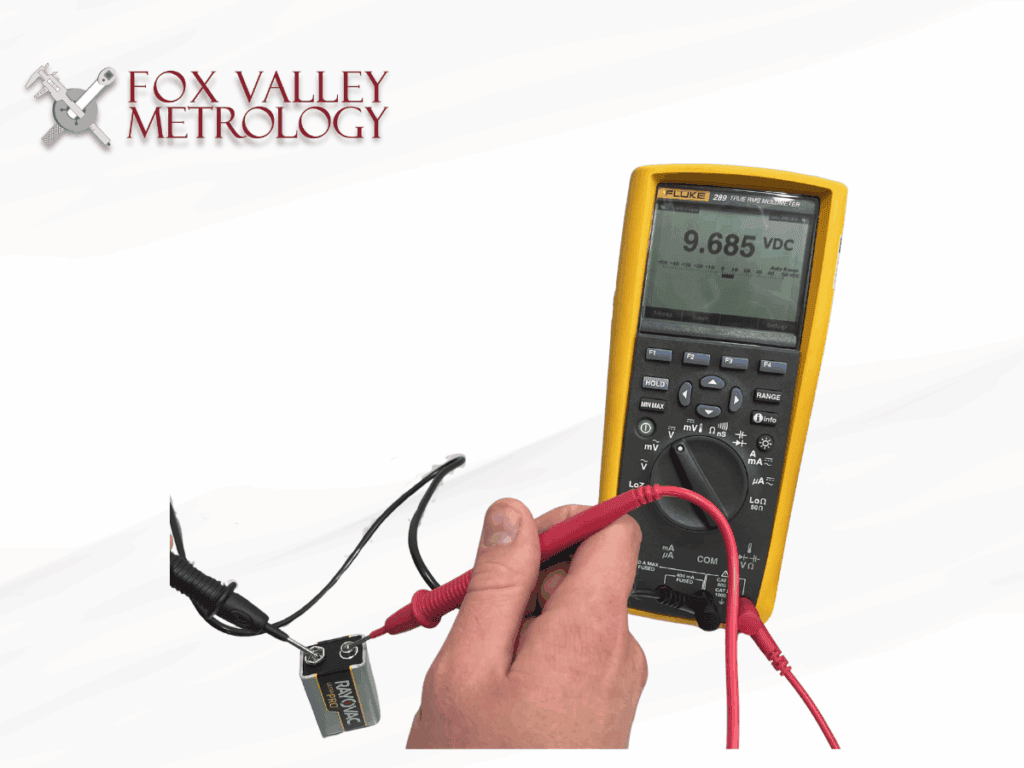

To use a multimeter, start by selecting the appropriate measurement mode (e.g., voltage, current, resistance) and range. Connect the probes to the circuit/component being measured, ensuring proper polarity. Read the displayed value on the multimeter’s screen, and if necessary, switch to a different range for more accurate results.

Using a multimeter effectively is an indispensable skill for anyone working with electrical and electronic systems. Whether troubleshooting a malfunctioning device or verifying circuit parameters, the accuracy and efficiency of your measurements hinge on your ability to operate this tool correctly.

This handy device can seem a bit perplexing at first glance, but once you get the hang of it, you’ll be testing, measuring, and diagnosing electrical issues like a pro. The good news is, the process of using a multimeter is quite simple.

In this post, we’ll delve into the nuances of multimeter operation, offering step-by-step guidance to ensure you get the most out of every reading. Let’s enhance your proficiency with this crucial instrument!

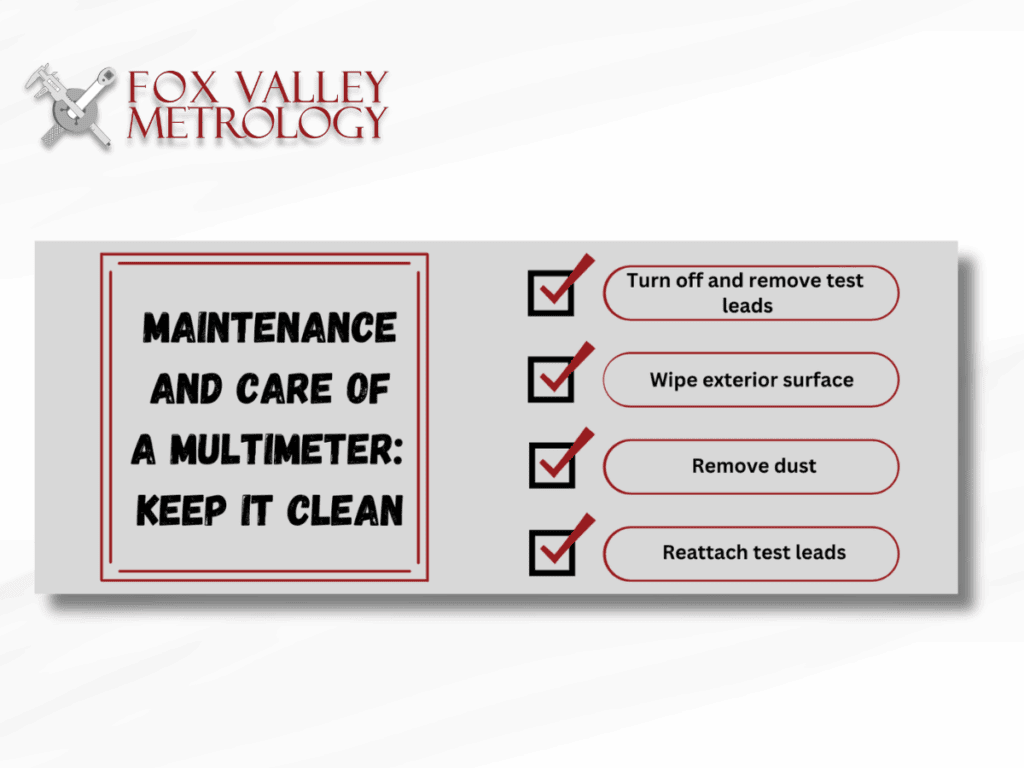

How Do I Properly Maintain A Multimeter?

Multimeters aren’t exactly the cheapest tool out there. You are going to want to get the most out of the investment you make. With proper care, a multimeter can easily last you decades.

To maximize the longevity and accuracy of your multimeter, proper maintenance and care are crucial. Regular maintenance not only helps it last longer but also ensures you get consistent and precise measurements. This is vital for accurate troubleshooting and analysis.

Additionally, regularly calibrating your multimeter helps maintain its accuracy and ensures that it continues to provide reliable readings over time.

By investing a little time and effort into caring for your multimeter, you not only safeguard your valuable tool but also ensure that it remains a trustworthy companion in your electrical work, enhancing your productivity and enabling you to tackle challenges with confidence.

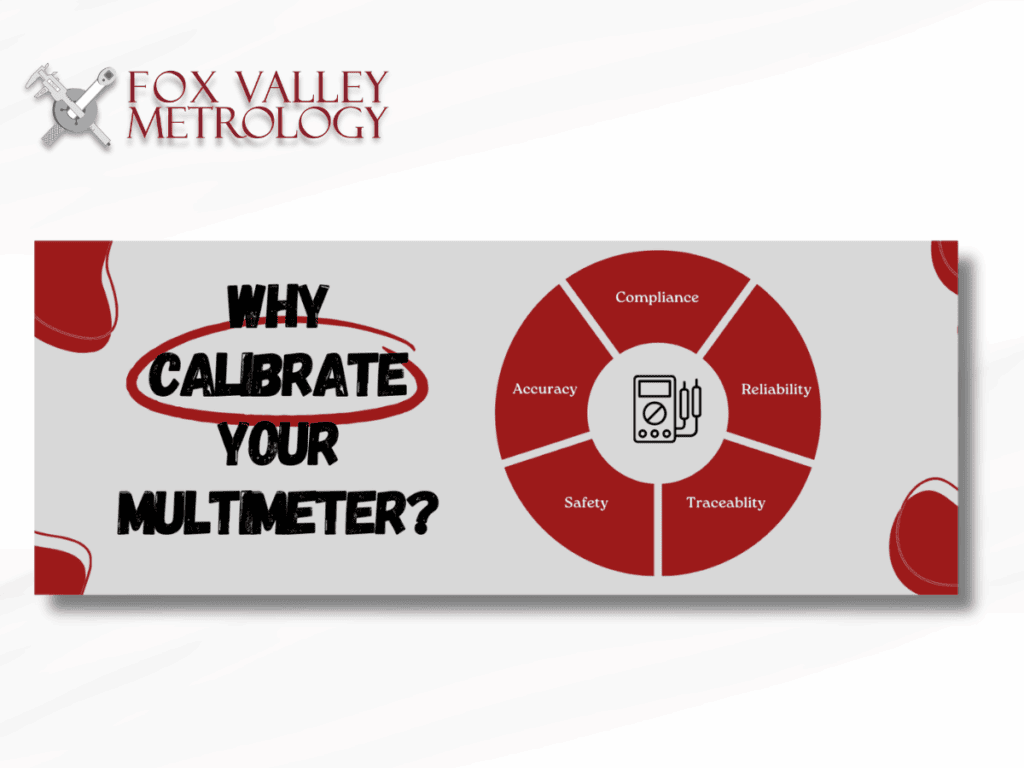

How Do I Calibrate A Multimeter?

Over time, multimeters can drift from their original calibration due to factors such as temperature variations, mechanical stress, and component aging. By calibrating your multimeter regularly, you can have confidence in the precision of your measurements and make informed decisions based on the data obtained.

Regular calibration of your multimeter ensures that the multimeter’s readings are reliable and trustworthy. The multimeter calibration process is:

- Gather the Necessary Equipment

- Read the Manufacturer’s Instructions

- Perform Pre-Calibration Checks

- Zero the Multimeter

- Perform the Multimeter Calibration

- Adjust if Necessary

- Finalize the Calibration

Essentially, calibration is the process of comparing the multimeter’s readings with a known reference value or a certified standard. The differences are then checked against the multimeter’s specifications. If a reading is within the multimeter’s allowable variance from the known reference value, the multimeter is in tolerance. If the variance of the reading from the known value is greater than the allowable variance, the multimeter is out of tolerance and adjustment, repair or replacement may be necessary.
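
The in-tolerance check described above can be sketched as follows. The function name, the 10.000 V reference, the two readings, and the ±0.5% allowance are hypothetical values used only to show the comparison; a real calibration procedure follows the manufacturer’s specifications and uses a certified reference standard.

```python
def calibration_check(reference, reading, tolerance_pct):
    """Compare a reading against a known reference value.

    Returns (in_tolerance, error), where error = reading - reference and
    the allowable variance is assumed to be a percentage of the reference.
    """
    allowed = abs(reference) * tolerance_pct / 100.0
    error = reading - reference
    return abs(error) <= allowed, error


# Hypothetical check against a 10.000 V reference with a +/-0.5% allowance.
for reading in (10.03, 10.08):
    ok, error = calibration_check(10.000, reading, 0.5)
    status = "in tolerance" if ok else "out of tolerance"
    print(f"Reading {reading:.2f} V: error {error:+.2f} V, {status}")
```

Here the allowance is 0.05 V, so the 10.03 V reading is in tolerance and the 10.08 V reading is out of tolerance.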

What Is The Future Of Multimeters?

Modern multimeters are remarkably capable measurement instruments. However, as technology continues to advance at an unprecedented pace, it raises the question: what does the future hold for the multimeter?

It is safe to assume that the future of multimeters will follow the same trends we are seeing overall in measurement. Some relatively predictable improvements may be:

- Smart Multimeters

- Artificial Intelligence in Multimeters

- Advancements in Multimeter Accuracy and Precision

- Integration of Advanced Features

- Non-Contact Measurement

- Wireless Connectivity and Cloud Integration

- Enhanced User Interfaces and Usability

- Multimeter Ecosystems

As fast as multimeters have advanced in the past century, it’s intriguing to consider where they may go from here.

Ready to dive into the world of multimeters? Let us begin by understanding where multimeters began and answering the question: What is the History of the Multimeter?